Tornado Cash Intelligent Demixer: Transaction Attribution Through Behavioral Analysis

What if we could build an algorithm that matches deposits to withdrawals in Tornado Cash? Wouldn't that break the privacy the protocol promises? This tutorial builds a proof of concept (POC) that tries to match deposits to withdrawals using a four-factor scoring system.

I received some valuable feedback on this POC in my LinkedIn post, which I plan to implement soon.

In the spirit of literate programming, this article links to the raw code files alongside the explanation. Just as Donald Knuth's programs weave together code and explanation, we'll explore how to match deposits to withdrawals, detect behavioral patterns, and identify network connections in privacy-preserving transactions.

Repository: tornado-cash-intelligent-demixer

Overview

Tornado Cash is a privacy-preserving protocol that breaks the link between deposit and withdrawal addresses using zero-knowledge proofs. However, privacy can be compromised through behavioral patterns, timing analysis, and network graph analysis. This tool demonstrates how to:

- Match deposits to withdrawals using temporal and value-based heuristics

- Detect address reuse patterns that compromise privacy

- Analyze relayer behavior and fee structures

- Track nullifier usage to detect potential double-spends

- Build network graphs to identify connected addresses

Architecture

The system consists of five main components:

1. Data Fetching Layer

The afetch.py module handles all interactions with the Bitquery GraphQL API. It retrieves both transfer events and contract events (Deposit/Withdrawal) to build a complete picture of Tornado Cash activity.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TornadoTransaction:
    """Represents a Tornado Cash transaction"""
    tx_hash: str
    from_address: str
    to_address: str
    value: str
    block_time: str
    gas: int
    call_signature: str
    transaction_type: str  # 'deposit' or 'withdraw'
    commitment: Optional[str] = None  # bytes32 from Deposit event
    nullifier: Optional[str] = None   # bytes32 from Withdrawal event
    recipient: Optional[str] = None   # address from Withdrawal event
    relayer: Optional[str] = None     # address from Withdrawal event
    fee: Optional[str] = None         # uint256 from Withdrawal event
```

The BitqueryFetcher class provides two primary ways to retrieve data:

- get_deposits_and_withdrawals_via_transfers(): Captures all transfers to and from Tornado Cash contracts

- get_deposit_events() / get_withdrawal_events(): Retrieve the specific contract events for detailed analysis

2. Configuration Management

The config.py module maintains:

- OFAC-sanctioned contract addresses across multiple networks (Ethereum, Polygon, BSC)

- Pool denominations mapping contract addresses to their pool sizes (0.1 ETH, 1 ETH, 10 ETH, 100 ETH)

- Analysis parameters like time tolerance windows and value matching thresholds

```python
# Default analysis configuration
DEFAULT_TIME_TOLERANCE_SECONDS = 7200  # 2 hours
DEFAULT_NETWORK_WINDOW_DAYS = 14
VALUE_TOLERANCE_PERCENT = 0.01  # 1% tolerance for matching amounts
```
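Because Ethereum addresses may arrive in checksummed mixed case, lookups on the denomination map are safest when keys are normalised. The following is a minimal sketch of how such a map could back the same_pool flag used in scoring. The placeholder addresses and the helpers pool_denomination() and same_pool() are hypothetical, not the contents of config.py.

```python
from typing import Dict, Optional

# Hypothetical placeholder addresses; config.py holds the real mapping.
POOL_DENOMINATIONS: Dict[str, float] = {
    "0xPool_0_1_ETH": 0.1,
    "0xPool_1_ETH": 1.0,
    "0xPool_10_ETH": 10.0,
    "0xPool_100_ETH": 100.0,
}

# Normalise keys once so lookups ignore checksum casing.
_NORMALISED = {addr.lower(): size for addr, size in POOL_DENOMINATIONS.items()}


def pool_denomination(contract_address: str) -> Optional[float]:
    """Return the pool size in ETH for a contract, or None if unknown."""
    return _NORMALISED.get(contract_address.lower())


def same_pool(contract_a: str, contract_b: str) -> bool:
    """True only when both contracts are known and share a denomination."""
    size_a = pool_denomination(contract_a)
    return size_a is not None and size_a == pool_denomination(contract_b)
```

Unknown contracts deliberately return False from same_pool(), so a pair involving an unmapped address always receives the pool penalty.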

3. Scoring Algorithm

The scoring.py module implements the matching heuristics. The core function calculate_match_score() adds four signals into a single score, where lower means a better match:

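```python
from typing import Optional


def calculate_match_score(
    time_diff_seconds: float,
    tolerance_seconds: float,
    deposit_value: Optional[float],
    withdrawal_value: Optional[float],
    same_contract: bool,
    same_pool: bool,
) -> float:
    """Lower scores indicate better matches"""
    # 0.0 for simultaneous transactions, 1.0 at the edge of the window
    time_score = time_diff_seconds / tolerance_seconds
    # Relative amount difference; guard against missing or zero values
    if deposit_value and withdrawal_value is not None:
        amount_score = abs(deposit_value - withdrawal_value) / deposit_value
    else:
        amount_score = 1.0  # assumption: treat a missing value as a full mismatch
    # Despite the names, these are penalties applied on a mismatch
    contract_bonus = 0.0 if same_contract else 0.3
    pool_bonus = 0.0 if same_pool else 0.5
    return time_score + amount_score + contract_bonus + pool_bonus
```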

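Scoring factors:

- Time proximity: time_diff_seconds / tolerance_seconds, so closer transactions score lower

- Amount similarity: The relative difference between deposit and withdrawal values, so closer amounts score lower

- Contract match: A pair on different contracts receives a 0.3 penalty

- Pool match: A pair in different pool denominations receives a 0.5 penalty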
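To make the weights concrete, here is a self-contained copy of the scoring formula applied to two hypothetical pairs. The handling of a missing or zero value is an assumption added for this sketch.

```python
from typing import Optional


def calculate_match_score(
    time_diff_seconds: float,
    tolerance_seconds: float,
    deposit_value: Optional[float],
    withdrawal_value: Optional[float],
    same_contract: bool,
    same_pool: bool,
) -> float:
    """Lower scores indicate better matches (mirrors the formula above)."""
    time_score = time_diff_seconds / tolerance_seconds
    if deposit_value and withdrawal_value is not None:
        amount_score = abs(deposit_value - withdrawal_value) / deposit_value
    else:
        amount_score = 1.0  # assumption: missing/zero value counts as a full mismatch
    contract_penalty = 0.0 if same_contract else 0.3
    pool_penalty = 0.0 if same_pool else 0.5
    return time_score + amount_score + contract_penalty + pool_penalty


TWO_WEEKS = 1_209_600
# 3.5 days after the deposit, 1 ETH in, 0.99 ETH out, same contract and pool.
close_pair = calculate_match_score(302_400, TWO_WEEKS, 1.0, 0.99, True, True)
# Same timing and amounts, but on a different contract and pool.
far_pair = calculate_match_score(302_400, TWO_WEEKS, 1.0, 0.99, False, False)
print(round(close_pair, 2), round(far_pair, 2))  # 0.26 1.06
```

The time term contributes 0.25 (3.5 of 14 days) and the amount term 0.01, so the two mismatch penalties (0.8 combined) dominate the difference between the pairs.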

4. Core Analysis Engine

The tornado_analyzer.py module orchestrates the analysis. The TornadoCashAnalyzer class provides several key methods:

Matching Deposits to Withdrawals

The match_deposits_withdrawals() method implements a greedy matching algorithm:

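```python
from typing import Dict, List


def match_deposits_withdrawals(
    self,
    tolerance_seconds: int = 1209600,  # 2 weeks
    value_tolerance_percent: float = 0.05
) -> List[Dict]:
    """One-to-one matching using greedy algorithm with scoring"""
    # Generate all candidate pairs
    candidates = []
    for d_idx, deposit in enumerate(self.deposits):
        # _timestamp() is a hypothetical helper: block_time string -> Unix seconds
        deposit_time = self._timestamp(deposit)
        for w_idx, withdrawal in enumerate(self.withdrawals):
            withdrawal_time = self._timestamp(withdrawal)
            time_diff = withdrawal_time - deposit_time
            if withdrawal_time > deposit_time and time_diff <= tolerance_seconds:
                score = calculate_match_score(...)  # arguments elided
                candidates.append(
                    {'deposit_idx': d_idx, 'withdrawal_idx': w_idx, 'score': score}
                )

    # Sort by score and greedily match
    candidates.sort(key=lambda x: x['score'])
    matches = []
    matched_deposits, matched_withdrawals = set(), set()
    for candidate in candidates:
        # Enforce one-to-one: neither side may already be matched
        if (candidate['deposit_idx'] not in matched_deposits
                and candidate['withdrawal_idx'] not in matched_withdrawals):
            matches.append(candidate)
            matched_deposits.add(candidate['deposit_idx'])
            matched_withdrawals.add(candidate['withdrawal_idx'])
    return matches
```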

Matching constraints:

- Withdrawal must occur after deposit

- Time difference within tolerance window (default: 2 weeks)

- Amounts match within tolerance (accounts for relayer fees)

- One-to-one matching (each deposit/withdrawal matched at most once)
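The same greedy procedure can be written as a self-contained, runnable sketch that enforces all four constraints. It assumes each transaction has already been reduced to a Unix timestamp, an ETH value and a contract address. The Tx type and greedy_match() are hypothetical stand-ins for TornadoTransaction and match_deposits_withdrawals(), and the pool term of the score is omitted for brevity.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Tx:
    """Hypothetical, simplified stand-in for TornadoTransaction."""
    timestamp: int  # Unix seconds
    value: float    # ETH
    contract: str


def greedy_match(
    deposits: List[Tx],
    withdrawals: List[Tx],
    tolerance_seconds: int = 1_209_600,  # 2 weeks
    value_tolerance_percent: float = 0.05,
) -> List[Tuple[int, int, float]]:
    """Return (deposit_idx, withdrawal_idx, score) tuples, best score first."""
    candidates = []
    for d_idx, dep in enumerate(deposits):
        if dep.value <= 0:
            continue  # a relative amount difference needs a positive deposit
        for w_idx, wd in enumerate(withdrawals):
            time_diff = wd.timestamp - dep.timestamp
            # Withdrawal must come after the deposit and inside the window
            if time_diff <= 0 or time_diff > tolerance_seconds:
                continue
            amount_diff = abs(dep.value - wd.value) / dep.value
            # Amounts must agree within the tolerance (fees, rounding)
            if amount_diff > value_tolerance_percent:
                continue
            contract_penalty = 0.0 if dep.contract == wd.contract else 0.3
            score = time_diff / tolerance_seconds + amount_diff + contract_penalty
            candidates.append((score, d_idx, w_idx))

    candidates.sort()  # lowest (best) score first
    used_deposits, used_withdrawals = set(), set()
    matches = []
    for score, d_idx, w_idx in candidates:
        # One-to-one: skip pairs whose deposit or withdrawal is already taken
        if d_idx in used_deposits or w_idx in used_withdrawals:
            continue
        used_deposits.add(d_idx)
        used_withdrawals.add(w_idx)
        matches.append((d_idx, w_idx, score))
    return matches
```

For example, with deposits at t=0 and t=100 and withdrawals at t=3600 and t=50 (1 ETH in, 0.99 ETH out, same contract), the lowest-scoring pair (deposit 0, withdrawal 1) is taken first, which leaves deposit 1 paired with withdrawal 0.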

Address Reuse Detection

Privacy is compromised when addresses appear in multiple transactions:

```python
from collections import Counter
from typing import Dict, List


def find_address_reuse(self, transactions: List[TornadoTransaction]) -> Dict[str, int]:
    """Find addresses appearing in multiple transactions"""
    address_counts = Counter()
    for tx in transactions:
        address_counts[tx.from_address] += 1
        address_counts[tx.to_address] += 1
    return {addr: count for addr, count in address_counts.items() if count > 1}
```
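If each record has a pool contract on one side (deposits sent to it, withdrawals paid out of it), the counting above will always report the pool contracts themselves as "reused". A minimal variant, assuming records are passed as (from_address, to_address) pairs and that a caller supplies the set of known contract addresses through a hypothetical exclude parameter, keeps the output focused on user addresses. It also lowercases addresses so checksum casing does not split counts.

```python
from collections import Counter
from typing import Dict, Iterable, Set, Tuple


def find_address_reuse(
    transactions: Iterable[Tuple[str, str]],  # (from_address, to_address)
    exclude: Set[str],
) -> Dict[str, int]:
    """Count addresses across transactions, skipping known pool contracts."""
    excluded = {addr.lower() for addr in exclude}
    counts = Counter(
        addr.lower()
        for pair in transactions
        for addr in pair
        if addr.lower() not in excluded
    )
    return {addr: n for addr, n in counts.items() if n > 1}
```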

Network Pattern Analysis

The analyze_network_patterns() method builds temporal graphs to identify connected addresses:

```python
def analyze_network_patterns(self, window_days: int = 14) -> Dict:
    """Analyze connections between addresses within time windows"""
    # Group transactions by time windows
    # Build adjacency lists for addresses
    # Identify clusters and patterns
```
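The three comments above leave the details open. One possible reading, sketched here as an assumption rather than the repository's method: bucket transfers into fixed windows of window_days, treat each (from, to) transfer as an undirected edge (skipping pool contracts, which would otherwise connect everything), and report the connected components in each window as clusters. network_clusters() and its tuple input format are hypothetical.

```python
from collections import defaultdict
from typing import AbstractSet, Dict, List, Set, Tuple

DAY = 86_400


def network_clusters(
    transfers: List[Tuple[int, str, str]],  # (unix_ts, from_addr, to_addr)
    window_days: int = 14,
    exclude: AbstractSet[str] = frozenset(),
) -> Dict[int, List[Set[str]]]:
    """Map window index -> connected address clusters within that window."""
    # Group edges by time window and build adjacency lists per window.
    graphs: Dict[int, Dict[str, Set[str]]] = defaultdict(lambda: defaultdict(set))
    for ts, src, dst in transfers:
        if src in exclude or dst in exclude or src == dst:
            continue
        window = ts // (window_days * DAY)
        graphs[window][src].add(dst)
        graphs[window][dst].add(src)

    # Identify clusters as connected components (iterative depth-first search).
    clusters: Dict[int, List[Set[str]]] = {}
    for window, adj in graphs.items():
        seen: Set[str] = set()
        components = []
        for start in adj:
            if start in seen:
                continue
            stack, component = [start], set()
            while stack:
                node = stack.pop()
                if node in component:
                    continue
                component.add(node)
                stack.extend(adj[node] - component)
            seen |= component
            components.append(component)
        clusters[window] = components
    return clusters
```

Fixed, non-overlapping windows keep the sketch simple, but two related transfers that straddle a window boundary land in different buckets; sliding windows avoid that at extra cost.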

Relayer Analysis

Relayers submit withdrawal transactions and pay the gas on the recipient's behalf, taking a fee out of the withdrawn amount. Analysis includes:

- Relayer usage patterns: Which relayers are most active

- Fee structures: How much relayers charge

- Recipient diversity: How many unique addresses each relayer serves

```python
from collections import defaultdict
from typing import Dict, List


def analyze_relayers(
    self,
    contract_addresses: List[str],
    limit: int = 1000,
    network: str = "eth",
) -> Dict:
    """Analyze relayer behavior and patterns"""
    withdrawals = self.get_withdrawal_events(...)
    relayer_stats = defaultdict(lambda: {
        'count': 0,
        'total_fees': 0,
        'recipients': set(),
    })
    # Aggregate statistics by relayer address
```
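The aggregation step is left as a comment above. A minimal sketch of it, assuming each withdrawal exposes relayer, recipient and fee as strings (fee as a uint256 in wei, matching the TornadoTransaction fields), and assuming a missing or zero-address relayer means the withdrawal was sent directly. The Withdrawal type, relayer_stats() and the summary keys are illustrative, not the repository's output format.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Optional

ZERO_ADDRESS = "0x" + "0" * 40


@dataclass
class Withdrawal:
    """Only the Withdrawal-event fields this sketch needs."""
    relayer: Optional[str]
    recipient: str
    fee: Optional[str]  # uint256 in wei, as a decimal string


def relayer_stats(withdrawals: List[Withdrawal]) -> Dict[str, dict]:
    stats = defaultdict(lambda: {"count": 0, "total_fees": 0, "recipients": set()})
    for w in withdrawals:
        if not w.relayer or w.relayer.lower() == ZERO_ADDRESS:
            continue  # assumption: no relayer was involved
        entry = stats[w.relayer.lower()]
        entry["count"] += 1
        entry["total_fees"] += int(w.fee or 0)  # Python ints keep wei exact
        entry["recipients"].add(w.recipient.lower())
    # Most active relayers first, with recipient diversity as a count.
    return {
        relayer: {
            "count": s["count"],
            "total_fees_wei": s["total_fees"],
            "unique_recipients": len(s["recipients"]),
        }
        for relayer, s in sorted(stats.items(), key=lambda kv: -kv[1]["count"])
    }
```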

Nullifier Analysis

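Nullifiers prevent double-spending: each withdrawal publishes the note's nullifier hash, and the contract rejects any hash it has already recorded. The analysis tracks:

- Nullifier reuse: Repeated nullifier hashes, flagged as potential double-spend attempts (since a reused hash makes the withdrawal revert, a repeat among successful Withdrawal events more likely indicates duplicated records in the fetched data)

- Nullifier patterns: Timing and frequency of nullifier usage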

```python
from collections import Counter
from typing import Dict, List


def analyze_nullifiers(
    self,
    contract_addresses: List[str],
    limit: int = 1000,
    network: str = "eth",
) -> Dict:
    """Analyze nullifier usage patterns"""
    withdrawals = self.get_withdrawal_events(...)
    nullifier_counts = Counter(tx.nullifier for tx in withdrawals)
    # Detect potential double-spends
```
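The detection step is left as a comment above. A minimal sketch keeps every nullifier hash whose count exceeds one. It assumes hashes are hex strings that may differ only in letter case, and it skips None values (records without a Withdrawal event), which a plain Counter over tx.nullifier would otherwise count together. repeated_nullifiers() is a hypothetical helper name.

```python
from collections import Counter
from typing import Dict, Iterable, Optional


def repeated_nullifiers(nullifiers: Iterable[Optional[str]]) -> Dict[str, int]:
    """Return nullifier hashes that appear more than once, with their counts."""
    counts = Counter(n.lower() for n in nullifiers if n)
    return {n: c for n, c in counts.items() if c > 1}
```

Before treating a hit as a double-spend attempt, comparing the tx_hash values of the repeated entries helps separate duplicated records from genuinely distinct withdrawals.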

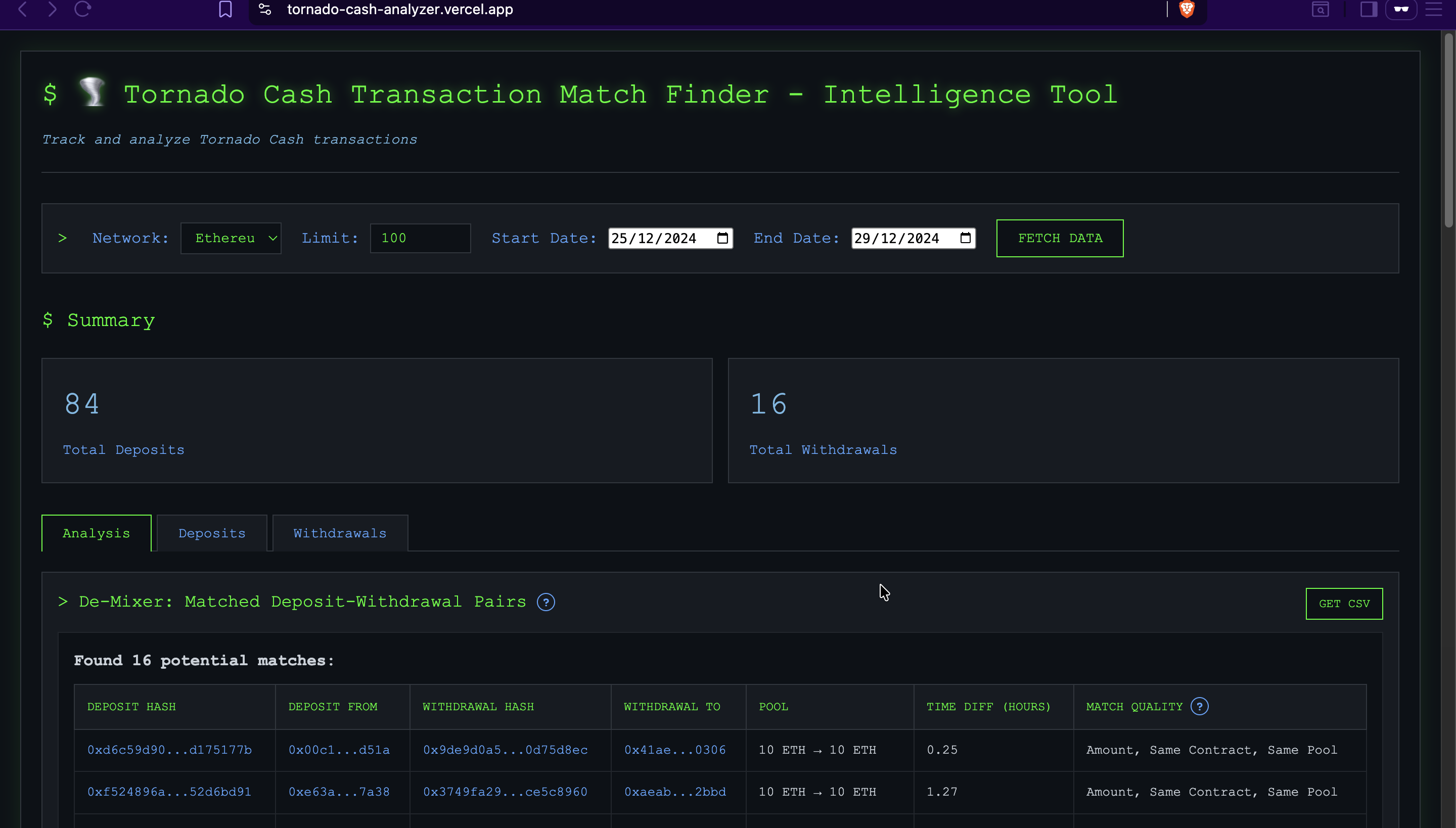

5. Web Interface

The app.py module provides a Flask-based web UI with several endpoints:

- /api/fetch: Fetch deposits, withdrawals, and analysis

- /api/summary: Get summary statistics without the full data

- /api/deposits: Lazy-load deposit data

- /api/withdrawals: Lazy-load withdrawal data

- /api/relayer-nullifier-analysis: Relayer and nullifier analysis (computationally heavy)

- /api/matched-pairs.csv: Export matched pairs as CSV
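The CSV export needs only the standard library. This sketch covers the serialisation step alone (no Flask routing); the column names in FIELDS are hypothetical and should follow whatever keys the matcher actually returns.

```python
import csv
import io
from typing import Dict, List

FIELDS = ["deposit_tx", "withdrawal_tx", "time_diff_seconds", "score"]


def matched_pairs_to_csv(matches: List[Dict]) -> str:
    """Serialise matched pairs to CSV text with a header row."""
    buffer = io.StringIO()
    # extrasaction="ignore" drops keys not listed in FIELDS instead of raising
    writer = csv.DictWriter(buffer, fieldnames=FIELDS, extrasaction="ignore")
    writer.writeheader()
    writer.writerows(matches)
    return buffer.getvalue()
```

In a Flask view, the resulting string would typically be returned as `Response(text, mimetype="text/csv")`.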

The UI displays:

- Deposit and withdrawal tables

- Matched pairs with confidence scores

- Address reuse patterns

- Timestamp analysis (daily/hourly activity)

- Network pattern visualizations

How De-Mixing Works

The de-mixing algorithm combines multiple heuristics:

1. Temporal Analysis

Transactions occurring close in time are more likely to be related. match_deposits_withdrawals() uses a 2-week window by default (tolerance_seconds=1209600), which can be changed per call.
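Since block_time is stored as a string, the temporal check needs a parse step first. This sketch assumes Bitquery returns ISO 8601 UTC timestamps such as 2022-08-08T12:00:00Z (an assumption about the response format). to_unix() and within_window() are hypothetical helpers that apply the same "after the deposit, inside the window" rule as the matcher.

```python
from datetime import datetime, timezone

TWO_WEEKS = 1_209_600  # seconds, the matcher's default tolerance


def to_unix(block_time: str) -> int:
    """Parse an ISO 8601 'Z' timestamp into Unix seconds (UTC)."""
    parsed = datetime.strptime(block_time, "%Y-%m-%dT%H:%M:%SZ")
    return int(parsed.replace(tzinfo=timezone.utc).timestamp())


def within_window(deposit_time: str, withdrawal_time: str,
                  tolerance_seconds: int = TWO_WEEKS) -> bool:
    """Withdrawal must strictly follow the deposit and fall inside the window."""
    diff = to_unix(withdrawal_time) - to_unix(deposit_time)
    return 0 < diff <= tolerance_seconds
```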

2. Value Matching

Withdrawals should match deposit amounts (minus relayer fees). The algorithm allows a 5% tolerance to account for:

- Relayer fees

- Gas costs

- Rounding differences
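A sketch of the value check, with the tolerance expressed as a fraction of the deposit (0.05 for 5%) and values assumed to be already converted to ETH floats. On a 1 ETH deposit, a 0.03 ETH relayer fee stays inside the window while a 0.1 ETH shortfall does not. Because the tolerance is relative, it is tighter in absolute terms for the 0.1 ETH pool (0.005 ETH) than for the 100 ETH pool (5 ETH). amounts_match() is a hypothetical helper name.

```python
def amounts_match(deposit_eth: float, withdrawal_eth: float,
                  value_tolerance_percent: float = 0.05) -> bool:
    """True when the relative difference is within the tolerance."""
    if deposit_eth <= 0:
        return False  # a relative difference is undefined for a zero deposit
    return abs(deposit_eth - withdrawal_eth) / deposit_eth <= value_tolerance_percent
```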

3. Contract/Pool Matching

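A deposit and withdrawal that use the same contract or pool denomination are more likely to be related. In fact, a note can only be withdrawn from the pool contract it was deposited into, because each Tornado Cash instance verifies proofs against its own Merkle tree of commitments. The scoring adds a 0.3 penalty for a contract mismatch and a 0.5 penalty for a pool mismatch.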

4. Behavioral Patterns

- Address reuse: Transactions that share an address are likely controlled by the same entity

- Timing patterns: Clusters of activity suggest coordinated behavior

- Network connections: Addresses that interact within time windows may be related
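One simple way to surface "clusters of activity" is to split sorted timestamps wherever the gap exceeds a threshold. This is a generic gap-based clustering sketch; activity_bursts() and its max_gap_seconds and min_size parameters are hypothetical, not settings from the repository.

```python
from typing import List


def activity_bursts(timestamps: List[int], max_gap_seconds: int = 600,
                    min_size: int = 3) -> List[List[int]]:
    """Group Unix timestamps into bursts separated by gaps > max_gap_seconds."""
    bursts: List[List[int]] = []
    current: List[int] = []
    for ts in sorted(timestamps):
        # A large gap closes the current burst and starts a new one
        if current and ts - current[-1] > max_gap_seconds:
            bursts.append(current)
            current = []
        current.append(ts)
    if current:
        bursts.append(current)
    # Only bursts with enough transactions are worth flagging
    return [b for b in bursts if len(b) >= min_size]
```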

5. Greedy Matching Algorithm

The algorithm uses a greedy approach:

- Generate all candidate deposit-withdrawal pairs

- Score each candidate using the heuristics

- Sort candidates by score (lower is better)

- Greedily match pairs, ensuring one-to-one correspondence
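Greedy selection is fast (sorting the candidate pairs dominates), but it does not guarantee the lowest total score across all matches. A hypothetical 2×2 score matrix shows the difference against an exhaustive search over one-to-one assignments, which is only feasible for tiny inputs. greedy_total() and exhaustive_total() are illustrative helpers.

```python
from itertools import permutations
from typing import List, Tuple

# scores[d][w]: hypothetical match scores, lower is better.
scores = [
    [0.10, 0.20],
    [0.15, 0.90],
]


def greedy_total(scores: List[List[float]]) -> Tuple[float, List[Tuple[int, int]]]:
    """Repeatedly take the lowest remaining score, one-to-one."""
    cells = sorted((s, d, w) for d, row in enumerate(scores) for w, s in enumerate(row))
    used_d, used_w, pairs, total = set(), set(), [], 0.0
    for s, d, w in cells:
        if d not in used_d and w not in used_w:
            used_d.add(d)
            used_w.add(w)
            pairs.append((d, w))
            total += s
    return total, pairs


def exhaustive_total(scores: List[List[float]]) -> Tuple[float, List[Tuple[int, int]]]:
    """Try every assignment of deposits to distinct withdrawals (square input)."""
    best_total, best_pairs = float("inf"), []
    for perm in permutations(range(len(scores[0]))):
        total = sum(scores[d][w] for d, w in enumerate(perm))
        if total < best_total:
            best_total, best_pairs = total, list(enumerate(perm))
    return best_total, best_pairs


print(greedy_total(scores))      # takes (0, 0) first, then is forced into (1, 1)
print(exhaustive_total(scores))  # pairs (0, 1) and (1, 0) for a lower total
```

For realistic input sizes, an optimal one-to-one assignment can be computed in polynomial time with the Hungarian method, for example via scipy.optimize.linear_sum_assignment, at the cost of an extra dependency.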

Usage Example

```python
from tornado_analyzer import TornadoCashAnalyzer
import config

# Initialize analyzer
analyzer = TornadoCashAnalyzer(
    oauth_token="your_bitquery_token",
    network="eth"
)

# Fetch transactions
contracts = config.get_tornado_cash_addresses("eth")
analyzer.get_deposits(contracts, limit=1000)
analyzer.get_withdrawals(contracts, limit=1000)

# Match deposits to withdrawals
matches = analyzer.match_deposits_withdrawals(
    tolerance_seconds=1209600,  # 2 weeks
    value_tolerance_percent=0.05
)

# Analyze patterns
reused_addresses = analyzer.find_address_reuse(
    analyzer.deposits + analyzer.withdrawals
)
network_patterns = analyzer.analyze_network_patterns(window_days=14)

# Generate report
report = analyzer.generate_report(contracts, limit=1000, network="eth")
```

Key Insights

Privacy Compromises

- Address Reuse: Using the same address for multiple deposits/withdrawals links transactions

- Timing Patterns: Rapid deposits and withdrawals suggest coordinated activity

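- Amount Patterns: Depositing and withdrawing the same distinctive combination of amounts (for example, three 10 ETH notes in and three 10 ETH notes out within a short period) creates a recognizable pattern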

- Relayer Usage: Consistent relayer usage can link transactions

Limitations

- False Positives: Matches are probabilistic, not deterministic

- Time Windows: A withdrawal outside the configured tolerance window can never be matched, however strong the other signals are

- Network Effects: External factors (market conditions, gas prices) affect timing

- Zero-Knowledge Proofs: The cryptographic privacy guarantees remain intact; this tool analyzes metadata

Conclusion

This tool demonstrates how behavioral analysis can reveal patterns in privacy-preserving protocols. While Tornado Cash's cryptographic guarantees remain strong, metadata analysis can provide insights for:

- Compliance: Identifying potentially sanctioned transactions

- Research: Understanding usage patterns and privacy practices

- Education: Demonstrating privacy trade-offs in blockchain systems

The codebase follows literate programming principles, with each module clearly documented and linked. The implementation is modular, allowing researchers and developers to extend the analysis with additional heuristics or integrate it into larger systems.

Explore the code:

- tornado_analyzer.py: Core analysis engine

- scoring.py: Matching heuristics

- afetch.py: Data fetching layer

- app.py: Web interface

- config.py: Configuration

Repository: tornado-cash-intelligent-demixer